This blog allows me to disseminate knowledge I think should be readily available. If I find myself ranting, I try to make sure it's informative, and not completely subjective. I try to offer solutions--not problems--but in the case I do present a problem, please feel free to post a comment.

Wednesday, December 27, 2006

Bragging Rights

In sort of an aimless manner, I ended up having a software RAID partition with an 899GB capacity. I don't know if I'll keep this configuration, but it's very fast and I can't imagine running out of space. I just hope it's not drawing too much power. I was pretty floored by the following responses from Linux:

/proc/cpuinfo:

processor : 0

vendor_id : GenuineIntel

cpu family : 6

model : 15

model name : Intel(R) Core(TM)2 CPU 6600 @ 2.40GHz

stepping : 6

cpu MHz : 1596.000

cache size : 4096 KB

physical id : 0

siblings : 2

core id : 0

cpu cores : 2

fdiv_bug : no

hlt_bug : no

f00f_bug : no

coma_bug : no

fpu : yes

fpu_exception : yes

cpuid level : 10

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe nx lm constant_tsc pni monitor ds_cpl vmx est tm2 cx16 xtpr lahf_lm

bogomips : 4811.97

processor : 1

vendor_id : GenuineIntel

cpu family : 6

model : 15

model name : Intel(R) Core(TM)2 CPU 6600 @ 2.40GHz

stepping : 6

cpu MHz : 1596.000

cache size : 4096 KB

physical id : 0

siblings : 2

core id : 1

cpu cores : 2

fdiv_bug : no

hlt_bug : no

f00f_bug : no

coma_bug : no

fpu : yes

fpu_exception : yes

cpuid level : 10

wp : yes

flags : fpu vme de pse tsc msr pae mce cx8 apic mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe nx lm constant_tsc pni monitor ds_cpl vmx est tm2 cx16 xtpr lahf_lm

bogomips : 4805.23

Now, my Pentium 4 3.2GHz processor gave me 6,400 bogomips for each CPU, but we all know that only approximately 6,400 operations per second can occur. In other words, the second CPU is virtual and only gets work done when the 31-stage Pentium 4 pipeline would otherwise be empty. These two 4,800 bogomips readings can occur simultaneously, allowing approximately 9,600 bogomips for the whole system.

/proc/meminfo:

MemTotal: 2074924 kB

MemFree: 1084092 kB

Buffers: 32624 kB

Cached: 673980 kB

SwapCached: 0 kB

Active: 481828 kB

Inactive: 454244 kB

HighTotal: 1179264 kB

HighFree: 264560 kB

LowTotal: 895660 kB

LowFree: 819532 kB

SwapTotal: 4095992 kB

SwapFree: 4095992 kB

Dirty: 42252 kB

Writeback: 0 kB

AnonPages: 229628 kB

Mapped: 55352 kB

Slab: 35120 kB

PageTables: 4552 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

CommitLimit: 5133452 kB

Committed_AS: 602780 kB

VmallocTotal: 114680 kB

VmallocUsed: 7012 kB

VmallocChunk: 107160 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

Hugepagesize: 4096 kB

Filesystem Size Used Avail Use% Mounted on

/dev/md2 899G 3.2G 849G 1% /

/dev/md0 99M 10M 84M 11% /boot

tmpfs 1014M 0 1014M 0% /dev/shm

192.168.0.5:/home 360G 249G 94G 73% /home

/dev/hde 3.3G 3.3G 0 100% /media/FC_6 i386 DVD

There are two 500GB SATA 3.0GB/s Seagates!

[root@localhost ryan]# /sbin/hdparm -t /dev/md2

/dev/md2:

Timing buffered disk reads: 426 MB in 3.01 seconds = 141.61 MB/sec

[root@localhost ryan]# /sbin/hdparm -t /dev/md2 ; /sbin/hdparm -t

/dev/md2; /sbin/hdparm -t /dev/md2

/dev/md2:

Timing buffered disk reads: 434 MB in 3.01 seconds = 144.34 MB/sec

/dev/md2:

Timing buffered disk reads: 434 MB in 3.00 seconds = 144.60 MB/sec

/dev/md2:

Timing buffered disk reads: 434 MB in 3.01 seconds = 144.26 MB/sec

[root@localhost ryan]# /sbin/hdparm -T /dev/md2 ; /sbin/hdparm -T

/dev/md2; /sbin/hdparm -T /dev/md2

/dev/md2:

Timing cached reads: 5120 MB in 2.00 seconds = 2565.42 MB/sec

/dev/md2:

Timing cached reads: 5152 MB in 2.00 seconds = 2580.52 MB/sec

/dev/md2:

Timing cached reads: 5060 MB in 2.00 seconds = 2533.59 MB/sec

Tuesday, December 12, 2006

Nightly Backup Script

Obviously, you'll have to change the file and directories. This is just an example.

[ryan@a564n cmpe646]$ cat cmpe646_backup.sh

#!/bin/sh

date=$(date +%s)

tar -cf /home/cmpe646/cmpe646_project_backup_$date.tar /home/cmpe646/*/*.h* /home/cmpe646/*/*.c* /home/cmpe646/*/*.bench*

bzip2 /home/cmpe646/cmpe646_project_backup_$date.tar

exit 0

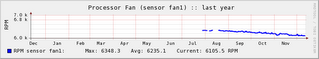

Blowing out Fans

What I get from these pictures is that the fan is now spinning faster like it was half a year ago and the processor also started running cooler after I blew out the fan.

The conclusion: The failure mode of computer case fans is to develop (physical and electrical) resistance and spin slower and slower. They should be kept clean to prolong the life of the fan and the components they cool, but don't use too much pressure--you don't want to break the assembly.

If you're using compressed air -- you can use about 80-100 PSI, but don't use anything bigger than a Sports Ball Inflator or you'll have too much air velocity. Also, if you don't have a filter or anything on your compressor, you have to be careful about debris! Don't hold the air canister upside down or anything that might stir up dust or debris inside the tank. For most people, it's probably best to go to the store and get a can of "air" for your exact purpose.

Sunday, November 19, 2006

Comparison of 20.1" LCD Monitors

I wanted to know which display would be better for desktop computing: a normal 1600x1200 pixel display or a widescreen 1680x1050 pixel (HDTV) display. The widescreen, would be more efficient for movies. I remember doing this once before, so I decided to write it out this time. To simplify my analysis, I chose to consider only 20.1" diagonal viewing areas, with the aforementioned resolutions.

For the widescreen, I calculate the display is 17" by 10.6", giving a viewing area of 181.6 square inches. For the 4:3 ratio display, I got 16" by 12" inches, and a viewing area of 193.9 square inches. This is a 6.7% bigger display area for the 4:3 ratio display. More generally, if the diagonal length is fixed, a square maximizes the area of a rectangle.

To compare the resolutions, the widescreen gives 1.76 megapixels, and the 4:3 ratio gives 1.92 megapixels. This is a 9.1% increase in pixel count for the 4:3 ratio display.

In conclusion, you win with the 1600x1200 20.1" non-widescreen display in terms of specifications, but they are generally more expensive (partly due to TFT manufacturing realities) but more compatible with video games (until recently). The wide screen, however, might be a more natural design because of our rectangular viewing perspectives.

Friday, November 03, 2006

Fedora Core 5 + IMAP Server + SquirrelMail

The mail from the Internet accounts could be copied to the IMAP server using Thunderbird, even if a delivery system (POP/SMTP) is not in place (for mail to get there otherwise). I installed SquirrelMail and tested this capability with Thunderbird already. I closed down sendmail, installed and started postfix. I set up the Cyrus IMAP, which was the hardest part of the IMAP/SquirrelMail setup.

The tutorials I found to help do this on Fedora Core 5 are:

http://cyrusimap.web.cmu.edu/imapd/install.html

http://nakedape.cc/info/Cyrus-IMAP-HOWTO/quickstart-fedora.html

http://www.howtoforge.com/perfect_setup_fedora_core_5

The last one shows how to set up SMTP, as does the one from Cyrus itself. I'm still trying to figure out if I can have my own SMTP and use my IP Address (or its alias) as a domain for sending mail to.

Even if I can't, I think that having the IMAP server in place, just for storing mail, would be a big help as the mail would be accessible from anywhere (even using SquirrelMail).

Thursday, November 02, 2006

PHP Gas Mileage Application

This program is a gas mileage database and analyzer written in PHP. It is self-contained (has a single source code file). It stores data in a simple text file in HTTP-query format. It allows users to add new records, and add new data files for different vehicles. It creates a backup before a new record is added. It also implements password protection before users can add refueling records.

Source Code:

Source Code

See it in action

Saturday, October 28, 2006

Simple Web File Upload Form

I put the following form and post processing files together from a manual on PHP. The HTML could really be embedded in the PHP file, but this might be better if you only want one copy of the PHP code on the system. Modify both to fit your environment and make sure the PHP file is executable.

Example .HEADER.html

Example getfile.php

This code is derived from a few examples.

Web File Search CGI Program for Linux

It has some thought put into security. The results are displayed as links to facilitate viewing/downloading the located file. It should be used to search one part of the file system. In my case, the public area is /srv/. This part of the filesystem should be linked into the Apache document root via

ln -s /srv /var/www/html/srvto allow the users access to the files with your HTTP server.

Following is the Perl-CGI code. Name it locate.cgi and it should go in your cgi-bin directory. Don't forget to chmod 755 the file so it's executable. Because of technical limitations of Blogger, I can't paste the Perl-CGI code here--please follow the following link:

http://www.cs.umbc.edu/~rhelins1/getfile.php?id=26

Thursday, October 26, 2006

Using the Same Thunderbird Storage Folder on Windows and Linux

Today I finally solved the problem of having multiple e-mail clients and multiple storage folders. First I used an IMAP account I have access to in order to move all the old e-mail I have on multiple computers onto the Thunderbird "Local Folders" in Linux on my server. This is as easy as copying all the messages in one Inbox to the Inbox on the IMAP account, and then on the other computer moving the messages out of the Inbox on the IMAP account to the local Inbox account. It's not the fastest, but it's the most compatible as Thunderbird doesn't support much importing from other e-mail storage folders.

So once I had everything on the "Local Folders" account in Thunderbird on Linux, I changed the Local Message Storage directory on Windows (not on the Server) to the storage directory in my home folder on my Linux server via a drive-mapped Samba share:

Y:\.thunderbird\ayrxordj.default\Mail\Local Folders

This can be found by going to Tools->Account Settings->Local Folders. Click Browse. You'll have to have "Show Hidden Files" ON to locate your Local Folders path with the GUI. You also need to make sure that all your e-mail accounts you have are set up to store the mail in the "Local Folders" rather than an individual folder for that account.

After some testing with no networking on the Windows computer, if the client doesn't have accesss to the network drive, you just get nothing under "Local Folders". In other words, it doesn't crash or anything.

So what does this mean? If I download a message on my Windows Thunderbird client, it is exactly like downloading it on the Linux Thunderbird client. It is the same for the Linux client (vice-versa). It also means that now I can have "Delete message from POP after download" set to "Yes" on both clients and avoid downloading e-mail that I've already seen.

Issues I can imagine running into include having both clients open simultaneously and possible Operating System dissimilarities in the implementation of Thunderbird. Also, it took a long time to open the gigantic storage folder the first time over the network, but cleaning up the 2-3 thousand messages and perhaps "compacting" the folders should help. The other issue is, since I merged the two clients, there are several duplicate e-mails, but there is an extension to find & delete duplicate messages which has already solved this problem for me. I removed all the duplicates (leaving me with only roughly two thirds of the messages, because this merge was so large) and compacting the folders did solve the long-to-load problem.

Now I can load up Thunderbird on Windows or Linux using the same "Local Folders" storage directory. Finally, no more getting on different computers/clients to find different mail.

There are still issues with using this technique. If you have a laptop or are losing connectivity, Windows will let you know that "Delayed Writing" failed. Also, a solution like having IMAP storage would be much more network-friendly in terms of bandwidth. See my post on setting up Cyrus IMAPD

Tuesday, October 24, 2006

Shell Script for Tunneling VNC over SSH

Replace {user} with your username on the remote machine, and the {WAN Address} with your public IP address on the remote machine. Replace the screen with the (single) number of the screen you use (1,2,3,...). Due to technical limitations, I can't use backslashes, so please make the command a one-liner. The ssh with the sleep argument needs to have a command after it that uses the forwarded port or it will close immediately.

#!/bin/sh

ssh -f -L 2590{screen}:127.0.0.1:590{screen}

{user}@{WAN Address} sleep 10;

vncviewer 127.0.0.1:2590{screen}:{screen};

exit

So an example script would look like:

#!/bin/sh

ssh -f -L 25901:127.0.0.1:5901 user@mysubnet.domain.com sleep 10;

vncviewer 127.0.0.1:25901:1;

exit

Shell Scripts for making links unique or sole copies

#!/bin/sh

# Check that link exists

ls "$1" > /dev/null

if test $? -eq 0

then

echo -n "File found. "

else

echo "Error: File not found."

exit 1

fi

linktarget=`find "$1" -printf "%l\0"`

linktargettype=`stat --format=%F "$linktarget"`

# Check that link target exists

if test -z "$linktarget"

then

echo "Error: Null link target, not a valid soft link."

exit 1

else

echo -n "Found soft link. "

fi

# Check that link target is NOT a directory

#if test "$linktargettype" = "directory"

# then

# echo "Link to directory, skipping."

# exit 0

# else

# echo "Not a directory."

#fi

# Remove soft link

echo -n "Unlinking $1... "

unlink "$1"

if test $? -eq 0

then

echo "Done."

else

echo "Error!"

exit 1

fi

# Replace soft link with unique copy

echo "Creating copy from $1"

echo -n " to $linktarget... "

cp -a "$linktarget" "$1"

if test $? -eq 0

then

echo "Done."

else

echo "Error!"

exit 1

fi

exit 0

Here's the same script for changing the link to the unique copy:

# Check that link exists

ls "$1" > /dev/null

if test $? -eq 0

then

echo -n "File found. "

else

echo "Error: File not found."

exit 1

fi

linktarget=`find "$1" -printf "%l\0"`

linktargettype=`stat --format=%F "$linktarget"`

# Check that link target exists

if test -z "$linktarget"

then

echo "Error: Null link target, not a valid soft link."

exit 1

else

echo -n "Found soft link. "

fi

# Check that link target is NOT a directory

#if test "$linktargettype" = "directory"

# then

# echo "Link to directory, skipping."

# exit 0

# else

# echo "Not a directory."

#fi

# Remove soft link

echo -n "Unlinking $1... "

unlink "$1"

if test $? -eq 0

then

echo "Done."

else

echo "Error!"

exit 1

fi

# Replace soft link with only copy

echo "Moving $1"

echo -n " to $linktarget... "

mv "$linktarget" "$1"

if test $? -eq 0

then

echo "Done."

else

echo "Error!"

exit 1

fi

exit 0

Convert Soft Links to Hard Links

It now handles soft links on different file systems properly.

#!/bin/sh

# soft2hard.sh by Ryan Helinski

# Replace a soft (symbolic) link with a hard one.

#

# $1 is name of soft link

# Returns 0 on success, 1 otherwise

#

# Example: To replace all the soft links in a particular directory:

# find ./ -type l | tr \\n \\0 | xargs -0 -n 1 soft2hard.sh

#

# finds all files under ./ of type link (l), replaces (tr) the newline

# characters with null characters and then pipes each filename one-by-one

# to soft2hard.sh

# Check that link exists

ls "$1" > /dev/null

if test $? -eq 0

then

echo -n "File found. "

else

echo "Error: File not found."

exit 1

fi

linktarget=`find "$1" -printf "%l\0"`

linktargettype=`stat --format=%F "$linktarget"`

# Check that link target exists

if test -z "$linktarget"

then

echo "Error: Null link target, not a soft link."

exit 1

else

echo -n "Found soft link. "

fi

# Check that link target is NOT a directory

if test "$linktargettype" = "directory"

then

echo "Link to directory, skipping."

exit 0

else

echo "Not a directory."

fi

# Remove soft link

echo -n "Unlinking $1... "

unlink "$1"

if test $? -eq 0

then

echo "Done."

else

echo "Error!"

exit 1

fi

# Replace with hard link

echo "Creating hard link from $1"

echo -n " to $linktarget... "

ln "$linktarget" "$1"

if test $? -eq 0

then

echo "Done."

else

echo "Error creating hard link, replacing soft link"

ln -s "$linktarget" "$1"

exit 1

fi

exit 0

Tuesday, October 10, 2006

GRUB Read Error

If you've ever gotten the following error:

Loading GRUB... Read error

It looks like the GRUB read error occurs not because I shut the system down abrubtly or modified the GRUB configuration incorrectly, but because I'm trying to boot the system up without a keyboard. So, for now, I'll just keep the keyboard and mouse plugged in, but I'd like to be able to boot without these eventually. Some settings in the BIOS (like enabling USB keyboard support) might let me get around this error.

Optimizing Multimedia and Backup Storage with Hard or Soft Links

I had md5 files generated for everything that was copied onto the server, so using the shell commands sort, grep, and uniq, I was able to clean up a lot of space from files that had been copied over twice as a result of using WinMerge to prepare for deleting the backups that were on one of the hard drives that went into the RAID.

I was about to start playing with FSlint, but by chance came across a perl program called dupseek. I was looking for a script which replaced one of two duplicate files with a link.

As far as I can tell, this is an excellent program with a well thought-out algorithm for finding duplicate files on a Unix system (based on personal experience, and what it says on their page), but more importantly to me, it has a function for creating Unix soft links in place of the duplicate files. Although it's text-mode, this is the best program I've used for dealing with duplicate files. And text-mode is just fine! This is a real life-saver because I don't want duplicates sitting on the file system, and I'd like to keep some files cross-referenced in directories. Also, removing duplicates in directories which are already backed up will make the copy on hard disk seem like it has fewer files than that on the CD.

Hard links would really be better (for me), because soft (symbolic) links in Unix probably raise some compatibility issues when, for instance, trying to but the directory on a CD. Unless interpreted correctly, these links are just files. With hard links, however, the same file system inode is just referenced by two different directories. Since the directories in question won't be changing, this wouldn't raise any issues (deletion, separation, etc.).

In fact, gnomebaker currently has a bug where soft links are dereferenced for computer the size of the CD image, but the link file itself if put onto the CD.

I was able to save less than 10 GB by realizing there were duplicate media files as a result of combining directories before the big move to the server RAID, and more than 2 GB by using dupseek.

http://www.pixelbeat.org/fslint/

http://www.beautylabs.net/software/dupseek.html

Notes:

You can use a text file, generated yourself or by filtering the report generated the “-b report” function of dupseek, which contains filenames to be removed. You can pipe these names to xargs which calls rm. This is useful in case files have been copied to more specific directories and many duplicates lie in a general directory (e.g. “downloads” vs. “singles”).

cat [name of report file] | grep “/Downloads/” | tr \\n \\0 | xargs -0 rm

Which accomplishes the task of filtering the report to only files which are in a path which includes

“/Downloads/”, replacing the new line characters with null characters, and xargs passes each of these lines to rm. This removes the duplicate files which are in the common directory (which you want to clean out, and not preserve). Also, this must be executed from the same directory the report file was created in reference to (to make the relative filenames match up). For safety, try replacing rm with ls before you do anything to make sure you're about to remove the right files:

cat [name of report file] | tr \\n \\0 | xargs -0 ls | less

Only after checking over this output, hit q and then run:

cat [name of report file] | tr \\n \\0 | xargs -0 rm

Note: A much safer plan is to use the interactive mode of dupseek, or use the FSlint GUI (takes into account hard links). The interactive mode of dupseek got too repetitive and so I just used it to identify duplicates in one case, but in general the interactive or batch mode is fine (be careful of the batch mode, unless you're running my version of dupseek).

In the end, dupseek is better for batch jobs (but I feel that only with my hard link modification) and FSLint is better for compatibility and running on directories like home folders where it is the case that you want to leave some unique files alone. Of course, a compressed file system would be a step better, but who has the (CPU) time for that?

Saturday, September 23, 2006

Making directory traversals more efficient

I wrote a script which takes less than a second to run, and which splits bulky folders up into 28 different subfolders folders. If there's a well-organized folder with more than 30-50 subfolders under it, then they're all the same type of subdirectory and they only differ in their name. More than thirty or fifty subfolders is too much for your users to digest at once, and it slows down traversing the file system. It takes longer for Linux to get all the files necessary to list all the subfolders, and if you're browsing with Apache, it might take a while to download the whole listing.

A very popular solution for this type of directory is to subdivide the collection of similar subfolders by their name. I wrote a script which does this based on the first character of the folder name. This way, you can divide a huge directory into chunks that make traversals more efficient for the computer and more responsive to your users (even over CIFS, FTP, NFS, etc.).

#!/bin/sh

mkdir /tmp/myindex

mkdir /tmp/myindex/0-9

mkdir /tmp/myindex/misc

mkdir /tmp/myindex/a

mkdir /tmp/myindex/b

mkdir /tmp/myindex/c

mkdir /tmp/myindex/d

mkdir /tmp/myindex/e

mkdir /tmp/myindex/f

mkdir /tmp/myindex/g

mkdir /tmp/myindex/h

mkdir /tmp/myindex/i

mkdir /tmp/myindex/j

mkdir /tmp/myindex/k

mkdir /tmp/myindex/l

mkdir /tmp/myindex/m

mkdir /tmp/myindex/n

mkdir /tmp/myindex/o

mkdir /tmp/myindex/p

mkdir /tmp/myindex/q

mkdir /tmp/myindex/r

mkdir /tmp/myindex/s

mkdir /tmp/myindex/t

mkdir /tmp/myindex/u

mkdir /tmp/myindex/v

mkdir /tmp/myindex/w

mkdir /tmp/myindex/x

mkdir /tmp/myindex/y

mkdir /tmp/myindex/z

mv ./[0-9]* /tmp/myindex/0-9/

mv ./[!0-9a-zA-Z]* /tmp/myindex/misc/

mv ./[Aa]* /tmp/myindex/a/

mv ./[Bb]* /tmp/myindex/b/

mv ./[Cc]* /tmp/myindex/c/

mv ./[Dd]* /tmp/myindex/d/

mv ./[Ee]* /tmp/myindex/e/

mv ./[Ff]* /tmp/myindex/f/

mv ./[Gg]* /tmp/myindex/g/

mv ./[Hh]* /tmp/myindex/h/

mv ./[Ii]* /tmp/myindex/i/

mv ./[Jj]* /tmp/myindex/j/

mv ./[Kk]* /tmp/myindex/k/

mv ./[Ll]* /tmp/myindex/l/

mv ./[Mm]* /tmp/myindex/m/

mv ./[Nn]* /tmp/myindex/n/

mv ./[Oo]* /tmp/myindex/o/

mv ./[Pp]* /tmp/myindex/p/

mv ./[Qq]* /tmp/myindex/q/

mv ./[Rr]* /tmp/myindex/r/

mv ./[Ss]* /tmp/myindex/s/

mv ./[Tt]* /tmp/myindex/t/

mv ./[Uu]* /tmp/myindex/u/

mv ./[Vv]* /tmp/myindex/v/

mv ./[Ww]* /tmp/myindex/w/

mv ./[Xx]* /tmp/myindex/x/

mv ./[Yy]* /tmp/myindex/y/

mv ./[Zz]* /tmp/myindex/z/

mv /tmp/myindex/* ./

rmdir /tmp/myindex

exit 0

Some 'for' loops would make this code substantially smaller, and if someone makes a suggestion I'll try it.

Compiling the vt1211.ko Kernel Module

I basically followed the directions from VIA, except I used the latest patch file for kernel 2.6.17 available at Lars Ekman's website: http://hem.bredband.net/ekmlar/vt1211.html instead of the one VIA provides in their VIA FC5 Hardware Monitor Application Notes (www.viaarena.com). The patch file is designed to patch the whole kernel tree (only a small part, but you need the whole thing). If you have 2.6.17-1.2174_FC5, the module I compiled is already available on Lars Ekman's website.

Below is a copy & paste of the instructions from VIA, available on VIA Arena, with annotations made where I remember their instructions didn't work. Fill in the mentioned kernel name with your kernel. If I ever have to do this again, I'll make my own instructions.

I got the corresponding kernel source package from YUM instead of downloading the RPM. The package is called kernel-devel. This puts files in /usr/src/redhat. Note that when you're done, you can use YUM to again remove this package. I had to do a few things to get rpmbuild installed and working (I remember it needed a few software packages from YUM), but then I could make it through the first few lines.

#rpm –ivh kernel-2.6.15-1.2054_FC5.src.rpm

#cd /usr/src/redhat/SPECS

#rpmbuild –bp –-target=i686 kernel-2.6.spec

#cd /usr/src/redhat/BUILD

Then they want you to move the kernel source tree to /usr/src/{name of kernel} and then apply the patch.

You can find the kernel source directory in path

/usr/src/redhat/BUILD/kernel-2.6.15/linux-2.6.15.i686. Move the

kernel source directory to path /usr/src/kernels and patch

the os default kernel.

#cd /usr/src/redhat/BUILD/kernel-2.6.15

#mv linux-2.6.15.i686 /usr/src/linux-2.6.15-1.2054_FC5

#cp vt1211_FC5.patch /usr/src

#cd /usr/src

#patch –p0

Now you have the new kernel tree, and you need to compile the kernel with the vt1211 source as a module to get the vt1211.ko.

Select needed item and rebuild the patched kernel

Edit the Makefile in directory linux-2.6.15-1.2054_FC5.Find the string

“EXTRAVERSION = -prep” and modify it to “EXTRAVERSION = -1.2054_FC5”.

#cd linux-2.6.15-1.2054_FC5

#cp /boot/config-2.6.15-1.2054_FC5 .config

#make menuconfig

Device Drivers ---> Hardware Monitoring support --->

[M] Hardware Monitoring support

[M] VT1211

After set the kernel item completely and save it, we can rebuild the kernel

source. When the compiling module procedure is completed, you can find

the “vt1211.ko” module to path

/lib/modules/2.6.15-1.2054_FC5/kernel/drivers/hwmon

#make

#cp linux-2.6.15-1.2054_FC5/drivers/hwmon/vt1211.ko

/lib/modules/2.6.15-1.2054_FC5/kernel/drivers/hwmon

#depmod -a

The GUI menuconfig didn't work for me. If you run make config, you can do the same thing in text mode. If you just run make, I think it will ask you if you want Kernel/Module whenever not specified by the .config file you copied from your boot folder. Make sure you select Module (type the letter 'm') for the vt1211 objects. Then, about six hours later, it'll finally make it through all the source and you'll get your .ko file. Copy it out of there and into your /lib/modules tree and then run depmod as in the instructions above.

When you're done, you can go back to the /usr/src/{linux kernel name} and run make clean, or you can remove that whole directory if you no longer need it. You can also remove the kernel-devel package, rpmbuild, etc.

Linux kernel 2.6.21 now includes an even better version of the vt1211 module! This kernel is the default on RedHat Fedora 7 Linux. Finally time to upgrade my server installation.

Monday, September 18, 2006

Create md5 sum files in Linux

Learned that the Linux shell can be quickly used to generate md5 checksums of an entire directory to an md5 file, the command is of the form:

cd [directory to generate check sums for]

find ./ -printf “%p\0” | xargs -0 -n 1 md5sum > ./checksums.md5

Then, to check the files in a new location (e.g. on a CD-ROM), use the command:

md5sum -c ./checksums.md5

Or there are other programs for validating the files with the checksums, like wxChecksums which is available for Windows.

I wanted this because the graphical wxChecksums was too difficult to compile, and didn't seem worth the effort. Although I'm sure a couple backwards-compatibility packages for gcc would fix this problem, the command-line method makes it straightforward for doing multiple check sums in a single command in text mode.

Sunday, September 17, 2006

Python BitTorrent Daemon

If you're running a server and leeching/seeding torrents, and it's a no-screen machine, you might not want to use a BitTorrent client with an interface. The main reason is, the interface requires a lot of CPU cycles to constantly update and if you're not even looking, why bother? Azureus is the most powerful BitTorrent client, but it's also the most CPU-intensive.

On my Mini-ITX server, Azureus running on a VNC Server (xvnc) left the kernel 60% or less idle at times when the GUI was open or when traffic was heavy (30-40% cycles given to Azureus), and Azureus is also known for hogging memory (weakness of Java). After I switched to just letting btseed run, the total CPU usage is about 8% (92% idle). This means that the kernel is more responsive to new tasks.

A Python BitTorrent service (Daemon) either comes with Fedora or is part of the packages installed as dependencies of the YUM bittorrent-gui package. There is a Daemon set up called btseed, which is capable of monitoring .torrent files in a specified directory and downloading them automatically. There's essentially no documentation I can find about how to use this correctly, but I got it working OK.

The /etc/init.d/btseed script should be used to start/stop/restart the Daemon. This script launches btseed, which is an incarnation of the launchmany-console Python program. The file /etc/sysconfig/bittorrent should be configured to your preferences.

The Daemon is capable of UPNP, which makes it pretty nice. Here is my /etc/sysconfig/bittorrent configuration file:

SEEDDIR=/srv/bittorrent/data

SEEDOPTS="--max_upload_rate 50 --display_interval 30 --minport 49900 --maxport 52000"

SEEDLOG=/var/log/bittorrent/btseed.log

TRACKPORT=6969

TRACKDIR=/srv/bittorrent/data

TRACKSTATEFILE=/srv/bittorrent/state/bttrack

TRACKLOG=/var/log/bittorrent/bttrack.log

TRACKOPTS="--min_time_between_log_flushes 4.0 --show_names 1 --hupmonitor 1"

Note that the TRACK* settings are not important unless you're running a tracker.

Get a free domain name (IP alias) for your subnet

DynDNS.com provides a free IP Address Alias for everyone. The only problem is, I have a dynamic IP address, so I had to also get ddclient (for Linux) working. It was pretty simple—a package was available on YUM, and it installs itself by default as a daemon (service). All you need to do is set up the /etc/ddclient.conf file to work correctly. You need to do two things with the ddclient: discover your IP address, and check with DynDNS. I basically just had to uncomment some lines and fill in the details. DynDNS has a facility for giving you your WAN IP address. Your router does too, but it may be too hard to find.